Demystifying High Dynamic Range (HDR) and Wide Color Gamut (WCG)

By Alan C. Brawn CTS, ISF-C, DSCE, DSDE, DSNE, DCME

In the world of display technologies, one of the topics currently on everyone’s mind is high dynamic range, or HDR. A lot of misinformation circulates about what HDR is and what it does. This white paper begins by defining what HDR really is, then delves into the key technical elements of HDR and WCG and how they affect what we see on a display.

Sidenote: If you would prefer “just the basics” of HDR and WCG—perhaps you’re a consumer or an integrator who needs to explain, briefly, what these terms mean and how they affect the technology experience—please click over to this post, HDR and WCG: Just the Basics.

What Is High Dynamic Range?

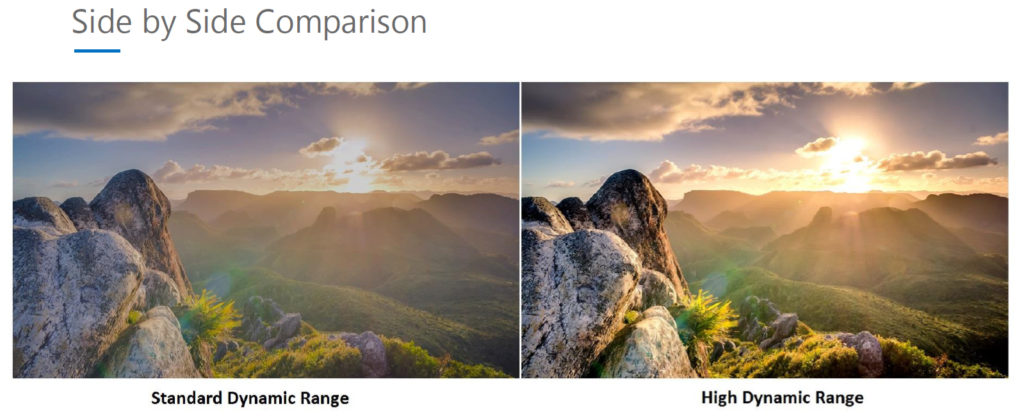

For still cameras, High Dynamic Range (HDR) means layering photos of the same scene taken at different exposures. This is done in post-processing, or automatically inside some camera models, and it lets a photo capture more of what we can actually see. For displays, however, HDR is all about improving the range of contrast between the darker and brighter parts of a scene. When presented with compatible content, HDR displays can produce a wider range from black to white (the grayscale), so you can see more detail in the very darkest and brightest areas of the picture. You’ll also see highlights, which are points of intense brightness on illuminated objects, such as reflections off a chrome car bumper. Without HDR, those highlights wouldn’t be any brighter than other bright objects in the scene. HDR displays are indeed brighter overall, but it isn’t just about overall brightness. Fundamentally, HDR is about delivering higher levels of peak brightness when the scene calls for it.

HDR is an end-to-end technology. That means source video needs to be created or captured with HDR-level color and brightness ranges; the distribution method needs to retain all the extra HDR brightness and color information; and the display device must be capable of reading and managing the HDR data and have sufficient brightness and color capabilities to deliver HDR’s picture quality benefits.

Why High Dynamic Range Matters to Viewers

The exciting part of HDR (as an overall concept) is that it takes us to the next level of image fidelity, both in video source material and in the displays that can reproduce what that material contains. In the process, HDR brings displayed images closer to what the human eye can actually see.

Overall, dynamic range describes the ratio between maximum and minimum values. For our purposes, we can interpret dynamic range as the ratio between the brightest whites and the darkest blacks in an image, that is, between the highest and lowest values of luminance. Let’s begin with standard dynamic range video, or SDR for short.

Standard-dynamic-range video describes images, rendering, and video that use a conventional gamma curve. Gamma is a nonlinear operation used to encode and decode brightness values in both still and moving imagery; it defines how the numerical value of a pixel relates to its actual brightness. The conventional gamma curve was based on the limits of the original cathode ray tube (CRT), which allowed for a maximum luminance of 100 cd/m² (nits). It remains the reference against which the other transfer curves discussed here are evaluated.

Older CRT technology had a maximum luminance of 100 cd/m²


The dynamic range that the human eye can perceive is approximately 14 f-stops, depending on the individual. SDR video with a conventional gamma curve and a bit depth of 8 bits per sample has a dynamic range of about 6 stops. Professional SDR video with a bit depth of 10 bits per sample has a dynamic range of about 10 stops. Conventional gamma curves include Rec. 601 and Rec. 709. HDR video has a dynamic range greater than SDR video. When HDR content is displayed on a 2,000-nit display with a bit depth of 10 bits per sample, it has a dynamic range of 200,000:1, or about 17.6 stops, a range not offered by previous displays.
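The relationship between a contrast ratio and a number of stops can be checked with a few lines of Python. This is a minimal sketch: the function names are ours, and the 0.01-nit black level is an assumption chosen because it yields the 200,000:1 ratio quoted above for a 2,000-nit display; the text does not state the black level.

```python
import math


def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Ratio between the brightest and darkest luminance a display can show."""
    if peak_nits <= 0 or black_nits <= 0:
        raise ValueError("luminance values must be positive")
    return peak_nits / black_nits


def stops_from_contrast(ratio: float) -> float:
    """Each stop is a doubling of light, so stops = log2(contrast ratio)."""
    if ratio <= 0:
        raise ValueError("contrast ratio must be positive")
    return math.log2(ratio)


# Assumed black level of 0.01 nits (not given in the text).
hdr_ratio = contrast_ratio(2000.0, 0.01)
hdr_stops = stops_from_contrast(hdr_ratio)
print(f"{hdr_ratio:,.0f}:1 is about {hdr_stops:.1f} stops")
```

Because the scale is logarithmic, doubling the peak brightness or halving the black level each adds exactly one stop.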

HDR “Standards”

There are multiple HDR formats, and several video interfaces support at least one of them. We will use the evolution of HDMI, the most common interface, as an example. HDMI 2.0a addressed the first developments in HDR and was released on April 8, 2015. In December 2016, HDMI announced that Hybrid Log-Gamma (HLG) support had been added to the HDMI 2.0b standard. HDMI 2.1 was officially announced in January 2017 and added support for Dynamic HDR, which uses dynamic metadata to allow changes on a scene-by-scene or frame-by-frame basis.

SMPTE Standards that Address HDR

- SMPTE ST 2084

- SMPTE ST 2084 defines the Perceptual Quantizer (PQ), a nonlinear electro-optical transfer function (EOTF) curve that translates 10-bit or 12-bit-per-channel digital values into a brightness range of 0.0001 up to 10,000 nits. SMPTE ST 2084 provides the basis for the HDR10 Media Profile and Dolby Vision implementation standards.

- SMPTE ST 2086

- SMPTE ST 2086 specifies the metadata items that describe the color volume (the color primaries, white point, and luminance range) of the display that was used in mastering video content. The metadata is specified as a set of values independent of any specific digital representation.

- SMPTE ST 2094

- SMPTE ST 2094 is the Dynamic Metadata for Color Volume Transform (DMCVT) standard. It was published in 2016 as six parts and includes four applications from Dolby, Philips, Samsung, and Technicolor.

- Note on EOTF and Metadata:

- According to Tektronix: “HDR provides a means by which to describe and protect the content creator’s intentions via metadata. It contains (in essence) a language used by the content creator to instruct the decoder. HDR provides metadata about how content was created to a display device in an organized fashion such that the display can maximize its own capabilities. As displays evolve, HDR will allow existing devices to always make a best effort in rendering images rather than running up against unworkable limitations.”

- “A formula called the electro-optical transfer function (EOTF) has been introduced to replace the CRT’s gamma curve. Some engineers refer to EOTF more simply as perceptual quality, or PQ. Whatever the name, it offers a far more granular way of presenting the luminance mapping according to the directions given by the content creator. EOTF is a part of the High Efficiency Video Coding (HEVC) standard.”
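As a rough illustration of the PQ curve that SMPTE ST 2084 defines, the sketch below evaluates the published EOTF formula and its inverse in Python. The function names `pq_eotf` and `pq_inverse_eotf` are ours, and the input is assumed to be a signal already normalized to the range 0 to 1; how a 10-bit or 12-bit code value is normalized depends on the signal range in use and is not shown here.

```python
# Constants from the SMPTE ST 2084 PQ formula.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PEAK_NITS = 10000.0  # top of the PQ luminance range


def pq_eotf(signal: float) -> float:
    """Decode a normalized PQ signal (0..1) to display luminance in nits."""
    e = min(max(signal, 0.0), 1.0)   # clamp out-of-range input
    p = e ** (1 / M2)
    numerator = max(p - C1, 0.0)
    denominator = C2 - C3 * p
    return PEAK_NITS * (numerator / denominator) ** (1 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Encode display luminance in nits to a normalized PQ signal (0..1)."""
    y = min(max(nits / PEAK_NITS, 0.0), 1.0)
    yp = y ** M1
    return ((C1 + C2 * yp) / (1 + C3 * yp)) ** M2


for level in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"signal {level:.2f} -> {pq_eotf(level):10.3f} nits")
```

With these constants a full-scale signal of 1.0 decodes to 10,000 nits, while 100 nits (the SDR reference level mentioned earlier) encodes to roughly the middle of the signal range. That shows how much of the code space PQ reserves for darker tones, where the eye is most sensitive to steps in brightness.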

HDR Standards

- HDR10

- HDR10 is the most widely adopted standard and the base for other standards. It is an open, royalty-free standard supported by a wide variety of companies, including monitor and display manufacturers such as Samsung, Dell, LG, Sharp, Sony, and Vizio, as well as Microsoft and Sony Interactive Entertainment.

- HDR10 Media Profile, also known as HDR10, uses the wide-gamut Rec. 2020 color space, a bit depth of 10 bits, and the SMPTE ST 2084 (PQ) transfer function. It also uses SMPTE ST 2086 “Mastering Display Color Volume” static metadata to send color calibration data of the mastering display.

- HDR10+

- HDR10+ was announced in April 2017, pioneered by Samsung and Amazon Video. HDR10+ updates HDR10 by adding dynamic metadata to its 10-bit color depth. This is referred to as Dynamic Tone Mapping. The dynamic metadata is based on Samsung’s application of SMPTE ST 2094-40 and is additional data that can be used to adjust brightness levels more accurately on a scene-by-scene or frame-by-frame basis. HDR10+ is also an open, royalty-free standard. A key HDR10+ advantage is backward compatibility with TVs and other consumer devices incorporating HDR10 decoders. The intent is to get the widest possible adoption of HDR10+ so that only one HDR grade of a film is required across all or most distribution platforms and can be shown on as many displays as possible.

- Dolby Vision

- Dolby Vision is a proprietary HDR format from Dolby Laboratories. It includes the Perceptual Quantizer (SMPTE ST 2084) electro-optical transfer function, up to 4K resolution, and a wide-gamut color space (Rec. 2020). It has a 12-bit color depth and dynamic metadata and allows up to 10,000 nits of maximum brightness (content is mastered to 4,000 nits in practice). It can encode mastering display colorimetry information using static metadata (SMPTE ST 2086) and also provide dynamic metadata (SMPTE ST 2094-10, Dolby format) for each scene. It is not backward compatible with SDR and requires a royalty.

- Hybrid Log-Gamma

- Hybrid Log-Gamma (HLG) is an HDR standard jointly developed by the BBC and NHK. HLG is designed for cable, satellite, and over-the-air TV broadcasts. One benefit is that it requires less bandwidth than HDR10+ and Dolby Vision and is backward compatible with SDR, although it does require 10-bit color depth. HLG defines a nonlinear opto-electronic transfer function (OETF) that uses a gamma curve like that of an SDR signal for the lower part of the signal range and adds a logarithmic curve for the extra brightness at the top, hence the “log” and “gamma” in the name. The HLG standard is open and royalty-free, and it is supported in ATSC 3.0. HLG is gaining traction because it doesn’t use metadata, eliminating the complexity of adding metadata in real time during the production and broadcasting of live events. Being backward compatible with SDR, it also eliminates the need to transmit separate SDR and HDR versions of the same broadcast to homes.

- Philips/Technicolor SL-HDR1

- SL-HDR1 is an HDR standard that was jointly developed by Philips, Technicolor, and STMicroelectronics. It provides direct backward compatibility with SDR by using static SMPTE ST 2086 metadata and dynamic SMPTE ST 2094-20 metadata to reconstruct an HDR signal from an SDR video stream, which can then be delivered using SDR distribution networks and services already in place. SL-HDR1 allows for HDR rendering on HDR devices and SDR rendering on SDR devices using a single-layer video stream. The companies’ end-to-end SDR and multi-standard HDR distribution solution is part of a technology bundle called Advanced HDR by Technicolor, which also converts SDR video to HDR live at the set-top box or head end.
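To make the “gamma plus log” shape of Hybrid Log-Gamma concrete, here is a minimal sketch of the HLG curve in its camera-side (OETF) form, using the constants published in ARIB STD-B67 and ITU-R BT.2100. The function name `hlg_oetf` is ours, and the input is assumed to be linear scene light normalized to the range 0 to 1.

```python
import math

# HLG constants as published in ARIB STD-B67 / ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A                      # about 0.28466892
C = 0.5 - A * math.log(4 * A)      # about 0.55991073


def hlg_oetf(scene_light: float) -> float:
    """Encode normalized linear scene light (0..1) to an HLG signal (0..1)."""
    e = min(max(scene_light, 0.0), 1.0)   # clamp out-of-range input
    if e <= 1 / 12:
        # Lower part of the range: square-root (gamma-like) segment.
        return math.sqrt(3 * e)
    # Upper part of the range: logarithmic segment for highlights.
    return A * math.log(12 * e - B) + C


for light in (0.0, 1 / 12, 0.25, 0.5, 1.0):
    print(f"scene light {light:.4f} -> signal {hlg_oetf(light):.4f}")
```

The two segments meet at a signal level of 0.5, so the lower half of the signal behaves much like a conventional gamma-encoded picture, while the upper half carries the highlights. That split is what allows an SDR set to show a watchable image from the same signal without any metadata.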

Wide Color Gamut: What You Need To Know

In concert with HDR’s increased range of luminance, wide color gamut introduces an expanded color space. This provides a much larger palette of colors to work with and more closely replicates what the human eye can see.

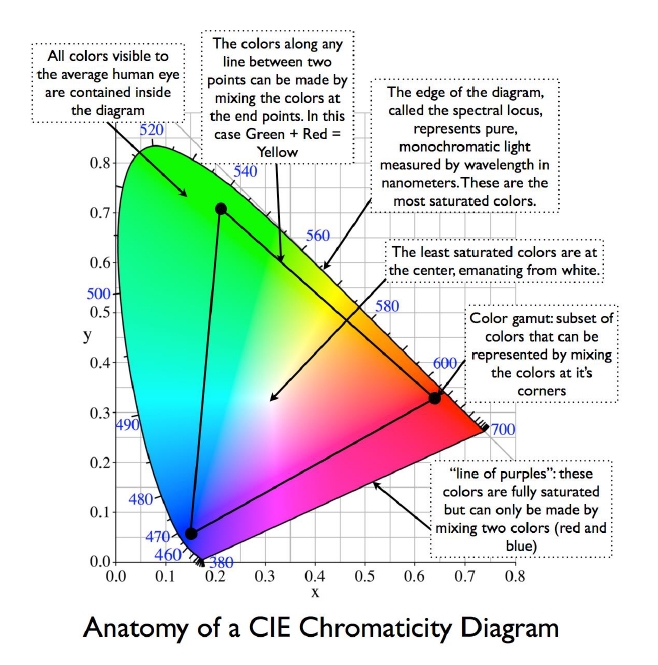

A color space is a standard that defines a specific range of colors that a given technology can display, with maximum red, green, and blue points mapped to sit inside the full CIE XYZ space (see below). The region of the full CIE XYZ space that a color space covers is called its gamut. No three points on the chart can cover 100% of what the human eye can see.

All imaging-based applications need a specific, well-defined color gamut to accurately reproduce the colors in the image content. Over the years this has given rise to many different standard color gamuts, generally based on what the displays of the time could produce. Displays and content have evolved together, and many distinct color gamuts have been defined, but they are not all created equal.

What makes a color gamut an industry standard is the existence of a significant amount of content created specifically for that gamut. This requires manufacturers to include that standard in their products. Content creators and the content they produce define a true color gamut standard. It is then up to the display to deliver it as accurately as possible on-screen.

Viewers tend to think of color gamuts in terms of their most saturated colors, but according to Dr. Soneira, the greatest percentage of content is found in the “interior regions of the gamut, so it is particularly important that all of the interior less saturated colors within the gamut be accurately reproduced.”

Since the introduction of HDTV and the expansion of internet content, the sRGB / Rec. 709 color gamut standard has been used for producing virtually all content for television, the internet, and digital photography. Because the source material is created to these standards, seeing colors as they were created and intended requires a display that matches the sRGB / Rec. 709 standard color gamut. If the display’s gamut is larger or smaller than the standard, the colors will appear over-saturated or under-saturated, respectively.

Today a display’s color reproduction is evaluated as the percentage of sRGB / Rec. 709 that it can produce or, as we look to the future, the percentage of Rec. 2020. Rec. 709 is the internationally recognized standard video color space for HDTV, with a gamut almost identical to sRGB.

For broadcast, Rec. 709 is defined at 8-bit depth, where black is level 16 and white is level 235. 10-bit systems are common in post-production, since the camera source typically has a much wider gamut and greater bit depth than Rec. 709.
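The black and white code levels can be turned into a normalized signal with a simple linear mapping. The sketch below is illustrative only: the function name is ours, and the 10-bit levels (64 for black, 940 for white) are the standard narrow-range equivalents of the 8-bit levels and are not stated in the text above.

```python
def narrow_range_to_normalized(code: int, bit_depth: int = 8) -> float:
    """Map a narrow-range ("video levels") code value to 0.0 = black, 1.0 = white.

    8-bit black/white are 16/235; higher bit depths scale those levels by
    2**(bit_depth - 8), giving 64/940 at 10 bits.
    """
    if bit_depth < 8:
        raise ValueError("bit depth must be at least 8")
    scale = 1 << (bit_depth - 8)
    black = 16 * scale
    white = 235 * scale
    return (code - black) / (white - black)


print(narrow_range_to_normalized(16))        # 8-bit black
print(narrow_range_to_normalized(235))       # 8-bit white
print(narrow_range_to_normalized(502, 10))   # a 10-bit mid-level code
```

Code values below black or above white map outside the 0 to 1 range; the sketch leaves them unclamped so that such footroom and headroom values stay visible to the caller.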

DCI-P3 is a wide gamut video color space introduced by SMPTE (Society of Motion Picture and Television Engineers) for digital cinema projection. It is designed to closely match the full gamut of color motion picture film. It is not a consumer standard and is only used for content destined for digital theatrical projection. Few monitors can display the full DCI gamut, but DCI spec projectors can.

The newest-generation standard color gamut is the impressively large Rec. 2020. Its gamut is 72% larger than sRGB / Rec. 709 and 37% larger than DCI-P3, making it extremely wide with extremely high color saturation.
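Gamut size comparisons like these depend on which chromaticity diagram the triangle areas are measured in. The sketch below assumes the CIE 1976 u′v′ diagram (the text does not say which diagram was used) and takes the red, green, and blue primaries from the published Rec. 709, DCI-P3, and Rec. 2020 specifications. Function and variable names are ours.

```python
# CIE 1931 xy chromaticities of the red, green, and blue primaries.
PRIMARIES_XY = {
    "Rec. 709": ((0.640, 0.330), (0.300, 0.600), (0.150, 0.060)),
    "DCI-P3": ((0.680, 0.320), (0.265, 0.690), (0.150, 0.060)),
    "Rec. 2020": ((0.708, 0.292), (0.170, 0.797), (0.131, 0.046)),
}


def xy_to_uv(x: float, y: float) -> tuple[float, float]:
    """Convert CIE 1931 xy to CIE 1976 u'v' chromaticity coordinates."""
    denom = -2 * x + 12 * y + 3
    return 4 * x / denom, 9 * y / denom


def triangle_area(points) -> float:
    """Area of a triangle from its three vertices (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def gamut_area_uv(name: str) -> float:
    """Area of a gamut triangle measured in the u'v' diagram (assumption)."""
    return triangle_area([xy_to_uv(x, y) for x, y in PRIMARIES_XY[name]])


rec2020 = gamut_area_uv("Rec. 2020")
gain_vs_709 = rec2020 / gamut_area_uv("Rec. 709") - 1
gain_vs_p3 = rec2020 / gamut_area_uv("DCI-P3") - 1
print(f"Rec. 2020 vs Rec. 709: {gain_vs_709:.0%} larger")
print(f"Rec. 2020 vs DCI-P3:   {gain_vs_p3:.0%} larger")
```

Measured this way, the areas come out close to the 72% and 37% figures quoted above. Measuring the same triangles in the older CIE 1931 xy diagram gives noticeably different percentages, which is why published gamut comparisons should always state the diagram used.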

When a display needs to support one or more additional color gamuts like sRGB / Rec. 709 that are smaller than its native color gamut, that can be accomplished with digital color management performed by the firmware, CPU, or GPU for the display. The digital RGB values for each pixel in an image being displayed are first mathematically transformed so they colorimetrically move to the appropriate lower saturation colors closer to the white point. The available color gamuts can either be selected manually by the user, or automatically switched if the content being displayed has an internal tag that specifies its native color gamut, and that tag is recognized by the display’s firmware.
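The mathematical transform described above is typically a 3×3 matrix applied to linear-light RGB values. As a hedged illustration, the sketch below assumes a hypothetical display whose native gamut is exactly Rec. 2020 and uses the Rec. 709 to Rec. 2020 matrix from ITU-R BT.2087. A real display would use a matrix derived from its own measured primaries, and would remove and reapply the transfer curve before and after this step (not shown). The function name is ours.

```python
# Linear-light Rec. 709 -> Rec. 2020 conversion matrix (ITU-R BT.2087).
REC709_TO_REC2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def rec709_to_rec2020_linear(rgb):
    """Re-express a linear Rec. 709 RGB triple in Rec. 2020 primaries."""
    return tuple(
        sum(coeff * value for coeff, value in zip(row, rgb))
        for row in REC709_TO_REC2020
    )


# Rec. 709 pure red becomes a less saturated mix in the wider gamut, so the
# display reproduces the original color instead of its own more vivid red.
print(rec709_to_rec2020_linear((1.0, 0.0, 0.0)))

# Each row sums to 1, so white and grays pass through essentially unchanged.
print(rec709_to_rec2020_linear((1.0, 1.0, 1.0)))
```

This is the sense in which the transformed values “move closer to the white point”: a fully saturated Rec. 709 color picks up small contributions from the other two channels of the wider-gamut display.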

Sorting Out the HDR Standards – Where Are We Today?

HDR10 has established itself as the basic and most widespread HDR standard. Its content is typically mastered at 1,000 nits. The limitation of HDR10 is its static, or fixed, metadata: a single set of values applies to the entire movie, so if the movie is quite bright or quite dark overall, it must be encoded as such, and this affects every scene. While HDR10 is a vast improvement over SDR, this has left room for more premium HDR standard options.

Dolby Vision is leading the way as the enhanced-quality version of HDR, and Dolby Vision devices can also play back HDR10 content. Content is mastered at 4,000 nits today, with headroom for 10,000 nits in the future. The major advantage that Dolby Vision has over HDR10 is dynamic metadata, which is the ability to vary the brightness levels on a scene-by-scene or even frame-by-frame basis. It also has the advantage of 12-bit color depth.

HDR10+ seeks to close the gap with Dolby Vision by adding dynamic metadata capability to the more basic HDR10 standard. HDR10 and HDR10+ also have a big advantage over Dolby Vision because they are open standards that display manufacturers can use for free, whereas using Dolby Vision requires royalties to be paid.

Hybrid Log-Gamma may well become a significant broadcast HDR standard, which raises the question of whether we need another HDR standard. To show something in HDR, your display needs to know how to interpret the signal. Other standards use metadata to tell the display how to render colors and assign brightness parameters. The problem is that older TVs, and ones that don’t support HDR, don’t see this metadata or know what to do with it.

HLG takes a different approach. Instead of starting with an HDR signal, it begins with a standard dynamic range (SDR) signal that any TV can use. The extra information for HDR rendering is added on, so an HDR TV that knows to look for this information can use it to display a broader range of colors and a wider range of brightness. Early on, the BBC and NHK decided against using a metadata-based approach, since metadata could be lost or, in the case of Dolby Vision, which uses a more complicated approach, fall out of sync with the image on screen, causing colors to display incorrectly. The ability to display on any TV, theoretically even your old non-HD TV, is another bonus for broadcast.

These varying standards all relate to HDR in one form or another. Some displays only address one standard while others address multiple standards. It is impossible to pick an eventual “winner” under a single standard. There are too many variables to consider including the cost of acquisition, bandwidth, cross compatibility, and licensing to name a few. Ultimately it is the ability to address multiple standards and even provide proprietary advances in display performance beyond those standards that makes the difference we will see.